Machine Learning–Driven Design of Experiments (ML-DOE): Efficient Experimentation

Introduction

What Is DOE and Why Does It Matter Today?

Design of Experiments (DOE) has been a cornerstone of scientific and industrial optimization for decades. It enables researchers and engineers to systematically understand how multiple process variables influence outcomes such as yield, quality, performance, and stability. Classical DOE methods have provided a reliable framework for exploring systems where several factors interact.

However, modern processes are becoming increasingly complex. Many involve nonlinear behaviours, high-dimensional parameter spaces, and experiments that are costly or time-consuming. In these situations, running large DOE matrices becomes impractical and inefficient. Organizations need faster, more adaptive methods that can intelligently guide experimentation without draining resources.

This need has given rise to a powerful new approach: Machine Learning–Driven Design of Experiments (ML-DOE).

What Is ML-DOE? A Smarter, Adaptive Evolution of DOE

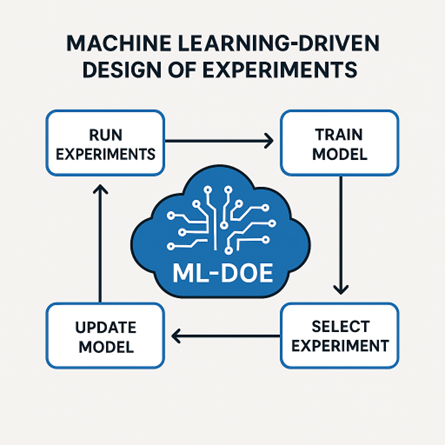

Machine Learning–Driven DOE represents a fundamental shift in how experimentation is carried out. Instead of relying on static, pre-planned experiment grids, ML-DOE uses machine learning models to guide experimentation dynamically. Every experiment becomes a learning opportunity, and the model continuously updates its understanding of the system.

This creates a self-improving feedback loop:

- Run a small number of experiments

- Train a model on the results

- Predict performance across the design space

- Select the next most informative experiment

- Update the model with new data

- Iterate until convergence
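
The loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production implementation: `run_experiment` is a hypothetical stand-in for a real lab run, the surrogate is a toy inverse-distance-weighted predictor rather than a Gaussian Process or Random Forest, and the selection rule simply adds a distance-based uncertainty bonus to the predicted value.

```python
import random

def run_experiment(x):
    """Hypothetical stand-in for a real experiment: a noiseless 1-D response."""
    return -(x - 0.7) ** 2

def predict(x, data):
    """Toy surrogate: inverse-distance-weighted mean and a distance-based uncertainty."""
    for xi, yi in data:
        if xi == x:
            return yi, 0.0  # already measured, so no uncertainty
    weights = [(1.0 / (x - xi) ** 2, yi) for xi, yi in data]
    total = sum(w for w, _ in weights)
    mean = sum(w * yi for w, yi in weights) / total
    uncertainty = min(abs(x - xi) for xi, _ in data)
    return mean, uncertainty

def suggest_next(data, candidates, kappa=1.0):
    """Pick the untested candidate with the highest prediction plus uncertainty bonus."""
    tested = {xi for xi, _ in data}
    untested = [x for x in candidates if x not in tested]

    def score(x):
        mean, uncertainty = predict(x, data)
        return mean + kappa * uncertainty

    return max(untested, key=score)

def ml_doe_loop(n_initial=3, n_iterations=10, seed=0):
    rng = random.Random(seed)
    candidates = [i / 100 for i in range(101)]  # discretised design space
    # Run a small number of initial experiments.
    data = [(x, run_experiment(x)) for x in rng.sample(candidates, n_initial)]
    for _ in range(n_iterations):
        x_next = suggest_next(data, candidates)         # select the next experiment
        data.append((x_next, run_experiment(x_next)))  # run it and update the data
    return data

data = ml_doe_loop()
best_x, best_y = max(data, key=lambda pair: pair[1])
print(f"best setting so far: x={best_x:.2f}, response={best_y:.4f}")
```

In practice the toy surrogate would be replaced by a model that provides calibrated uncertainty, and the fixed iteration count by a convergence check.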

Unlike classical DOE, which assumes relationships upfront, ML-DOE allows the system to reveal its own behaviour. It can identify interactions, nonlinearities, and hidden trends that traditional designs struggle to capture. This makes ML-DOE especially powerful for modern R&D challenges where complexity is the norm rather than the exception. Where classical DOE tells you how to plan experiments, ML-DOE tells you what to test next based on real-time learning.

Indeed, this hybrid (DOE + ML) approach is gaining traction. As reported in a recent review, there is a growing trend to combine DOE with ML, often using sequential “active learning” strategies to suggest new experiments dynamically [1].

How ML-DOE Works: A Practical, Operator-Friendly Workflow

ML-DOE follows a workflow that feels natural to engineers, scientists, and process experts, but adds intelligent decision-making at each step.

- Define Input Parameters (X) and Their Ranges

The user begins by listing the adjustable variables in the process and specifying the allowable range for each. These could include temperature, pH, feed rates, mixing speeds, catalyst loads, formulation ratios, machine speeds, or any combination of controllable factors. This defines the exploration space for the model.
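
As a rough sketch, the exploration space could be captured as a list of named factors with bounds. The `Factor` class, the factor names, and the numeric ranges below are illustrative assumptions, not part of any specific ML-DOE tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Factor:
    """One adjustable input (X) with its allowable range."""
    name: str
    low: float
    high: float
    unit: str = ""

    def __post_init__(self):
        if not self.low < self.high:
            raise ValueError(f"{self.name}: low must be below high")

    def scale(self, value):
        """Map a real-world setting onto [0, 1] so factors are comparable."""
        return (value - self.low) / (self.high - self.low)

    def unscale(self, u):
        """Map a value in [0, 1] back to real-world units."""
        return self.low + u * (self.high - self.low)

# Hypothetical design space; names and ranges are made up for illustration.
design_space = [
    Factor("temperature", 20.0, 80.0, "degC"),
    Factor("pH", 4.0, 9.0),
    Factor("mixing_speed", 100.0, 600.0, "rpm"),
]

for factor in design_space:
    print(factor.name, factor.low, factor.high, factor.unit)
```

Scaling every factor to a common interval is a common design choice because it keeps a factor with a large numeric range from dominating distance-based models.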

- Define the Output (Y) and Optimization Objective

Next, the response variable is defined as yield, hardness, purity, growth, throughput, conversion, stability, or any measurable metric. The objective is then set: maximize, minimize, or reach a target range.
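
The three objective types can be reduced to a single "higher is better" score, which keeps the rest of the workflow the same regardless of the goal. The helper below is a hypothetical sketch; the function name and the target-range penalty (distance to the nearest bound) are assumptions chosen for illustration.

```python
def make_objective(kind, target=None):
    """Return a function that maps a measured response to a score (higher is better)."""
    if kind == "maximize":
        return lambda y: y
    if kind == "minimize":
        return lambda y: -y
    if kind == "target":
        low, high = target
        # Zero inside the target range, negative distance to the nearest bound outside it.
        return lambda y: -max(low - y, 0.0, y - high)
    raise ValueError(f"unknown objective kind: {kind!r}")

# Example: purity should land between 90 and 95 (illustrative numbers).
score_purity = make_objective("target", target=(90.0, 95.0))
print(score_purity(92.0), score_purity(88.0), score_purity(97.0))
```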

- ML-DOE Suggests an Initial Set of Experiments

Instead of proposing a large grid, ML-DOE suggests a small number of strategically diverse experiments, often between 5 and 10. These provide broad coverage of the design space and give the model enough information to start learning.
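
One common way to obtain a small, diverse starting set is Latin hypercube sampling, which places exactly one run in each equal-width slice of every factor's range. The source does not say which method a given ML-DOE tool uses, so treat the following as one plausible sketch; the factor names and ranges are illustrative.

```python
import random

def latin_hypercube(ranges, n_runs, seed=0):
    """Return n_runs settings with one point per stratum along every factor."""
    rng = random.Random(seed)
    columns = []
    for low, high in ranges.values():
        # One random point inside each of n_runs equal-width strata of [0, 1).
        strata = [(i + rng.random()) / n_runs for i in range(n_runs)]
        rng.shuffle(strata)  # decouple the stratum order between factors
        columns.append([low + u * (high - low) for u in strata])
    names = list(ranges)
    return [dict(zip(names, row)) for row in zip(*columns)]

ranges = {"temperature": (20.0, 80.0), "pH": (4.0, 9.0)}  # hypothetical factors
initial_runs = latin_hypercube(ranges, n_runs=8)
for run in initial_runs:
    print({name: round(value, 2) for name, value in run.items()})
```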

- Operator Runs Experiments and Inputs Results

The operator performs the recommended trials and returns the measured outputs. These real-world data points are used to update and refine the model.

- ML-DOE Suggests the Next Best Experiments

The model identifies promising regions, areas with high uncertainty, or gaps in current knowledge. It then recommends the next set of experiments that offer maximum information gain or the highest optimization potential. This ensures every new experiment is meaningful, not wasteful. Such adaptive experimental design strategies, in which ML progressively guides experiment selection, have been shown to drastically reduce the number of experiments needed [2][3].
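
A widely used way to balance promising regions against uncertain ones is the expected improvement (EI) criterion from Bayesian optimization. The sketch below assumes the surrogate returns a Gaussian prediction (mean and standard deviation) for each candidate and that the goal is maximization; the candidate labels and numbers are made up for illustration.

```python
import math

def expected_improvement(mean, std, best_so_far):
    """Expected improvement over best_so_far for a Gaussian prediction (maximization)."""
    if std <= 0.0:
        return max(mean - best_so_far, 0.0)
    z = (mean - best_so_far) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (mean - best_so_far) * cdf + std * pdf

# Hypothetical surrogate predictions: candidate -> (predicted mean, predicted std).
candidates = {
    "A": (0.80, 0.02),  # slightly better than the current best, low uncertainty
    "B": (0.78, 0.10),  # slightly worse on average, but highly uncertain
    "C": (0.60, 0.05),  # clearly worse
}
best_so_far = 0.79

scores = {name: expected_improvement(m, s, best_so_far) for name, (m, s) in candidates.items()}
next_run = max(scores, key=scores.get)
print(next_run, {name: round(value, 4) for name, value in scores.items()})
```

With these numbers the uncertain candidate B scores highest, which shows how the criterion can favour exploration over a marginal but near-certain gain.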

- Iterate Until Convergence

This loop (suggest, run, learn) continues until the system reaches stable, optimal conditions or the desired confidence level. By the end, the user has:

- Optimized parameter settings

- A learned digital model of the process

- Insight into how variables interact

- Predictive capability for future scenarios

ML-DOE turns experimentation into an intelligent, focused, and highly efficient process.
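
The "iterate until convergence" step needs a concrete stopping rule. One simple option, shown here as an assumption rather than a prescribed method, is to stop once the best response has improved by less than a tolerance over the last few runs; `patience` and `tolerance` are hypothetical parameter names.

```python
def has_converged(responses, patience=3, tolerance=0.01):
    """True if the best response improved by less than tolerance over the last `patience` runs."""
    if len(responses) <= patience:
        return False  # not enough history to judge
    best_before = max(responses[:-patience])
    best_now = max(responses)
    return best_now - best_before < tolerance

# Illustrative response histories (higher is better).
print(has_converged([0.5, 0.7, 0.8, 0.805, 0.801, 0.803]))
print(has_converged([0.5, 0.6, 0.7, 0.8]))
```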

Figure 1: User workflow cycle

Can ML-DOE Use Existing Historical Data?

One of the most practical strengths of ML-DOE is that it can begin with data you already have. Many organizations possess years of historical experiments, archived process data, past DOE studies, and pilot-scale runs. ML-DOE can ingest this information as its initial training set, allowing the model to start with built-in knowledge rather than a blank slate.

Reviews highlight that ML can effectively analyze “costly to collect or scarce data” when integrated with DOE [3].

This enables organizations to:

- Modernize older DOE studies

- Improve existing processes without repeating past trials

- Optimize under new goals

- Reduce development time

ML-DOE can accelerate innovation without starting from zero.
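
Before historical runs are used as a starting training set, they usually need a basic screen. The sketch below keeps only records that have a measured response and whose settings fall inside the current design space; the record layout, field names, and values are hypothetical.

```python
def seed_from_history(records, ranges, response):
    """Keep historical runs with a measured response and all factors inside the ranges."""
    usable = []
    for record in records:
        if record.get(response) is None:
            continue  # response was never measured
        in_range = all(
            name in record and low <= record[name] <= high
            for name, (low, high) in ranges.items()
        )
        if in_range:
            usable.append(record)
    return usable

ranges = {"temperature": (20.0, 80.0), "pH": (4.0, 9.0)}
history = [  # made-up archive entries for illustration
    {"temperature": 40.0, "pH": 6.5, "yield": 71.2},
    {"temperature": 95.0, "pH": 6.0, "yield": 55.0},  # temperature out of range
    {"temperature": 60.0, "pH": 7.0, "yield": None},  # response missing
    {"temperature": 70.0, "yield": 64.8},             # pH not recorded
    {"temperature": 55.0, "pH": 5.0, "yield": 68.0},
]

training_set = seed_from_history(history, ranges, response="yield")
print(len(training_set), "of", len(history), "historical runs are usable")
```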

Benefits of ML-DOE: Why Modern R&D Is Moving Beyond Classical DOE

Machine Learning–Driven DOE offers several decisive advantages that make it far more efficient and insightful than traditional experimental approaches. Peer-reviewed studies report substantial gains in accuracy, speed, and resource efficiency when ML is integrated with DOE.

- Fewer Experiments, Faster Optimization

Active-learning and ML-guided experiment selection can significantly reduce the number of required experiments while improving model fidelity. Bayesian optimization, for example, has been reported to outperform human decision-making in chemical reaction optimization [3].

- Models Nonlinear and High-Dimensional Systems Automatically

ML-DOE can map nonlinear relationships and variable interactions that classical DOE struggles to capture. This leads to more accurate insights and more robust optimization.

- Real-Time Adaptive Learning

ML-DOE updates its predictions after every experiment, focusing exploration where it matters most. Adaptive ML-driven experimentation has been demonstrated in autonomous chemical synthesis systems [5] and in data-driven reaction optimization [3].

- Lower Cost, Lower Risk

Recent advances incorporate cost, time, reagent use, and safety constraints directly into Bayesian optimization frameworks [4]. This ensures the experimental strategy is efficient not only scientifically but also operationally.
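
A simple way to illustrate the idea, not necessarily how published cost-informed methods work, is to filter out unsafe or over-budget candidates and then rank the rest by acquisition score per unit cost. The field names (`score`, `cost`, `safe`) and all numbers are assumptions made for this sketch.

```python
def pick_cost_aware(candidates, budget):
    """Choose the safe, affordable candidate with the best score per unit cost."""
    feasible = [c for c in candidates if c["safe"] and c["cost"] <= budget]
    if not feasible:
        return None  # nothing can be run under the current constraints
    return max(feasible, key=lambda c: c["score"] / c["cost"])

# Hypothetical candidates: score is an acquisition value such as expected improvement.
candidates = [
    {"name": "run_1", "score": 0.035, "cost": 4.0, "safe": True},
    {"name": "run_2", "score": 0.030, "cost": 1.5, "safe": True},
    {"name": "run_3", "score": 0.050, "cost": 1.0, "safe": False},  # violates a safety limit
    {"name": "run_4", "score": 0.045, "cost": 12.0, "safe": True},  # exceeds the budget
]

choice = pick_cost_aware(candidates, budget=10.0)
print(choice["name"] if choice else "no feasible experiment")
```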

- Leverages Historical Data for a Head Start

ML-DOE can ingest old DOE data, past pilot runs, or archived experiments, enabling faster convergence and preventing duplicated effort.

- Proven Across Industries

ML-DOE has shown measurable success in materials science, pharmaceuticals, chemical synthesis, robotics, and manufacturing, positioning it as a modern standard for intelligent experimentation.

Conclusion

Machine Learning–Driven DOE represents the next evolution in experimental strategy. By combining the structure of classical DOE with the adaptive intelligence of ML, it transforms experimentation into a smarter, faster, and more resource-efficient process. ML-DOE reduces the number of required experiments, speeds up optimization, and uncovers deeper insights into complex systems.

Its proven success across multiple industries demonstrates its real-world impact. ML-DOE doesn’t just help you design experiments; it helps you learn your system in real time, adapt continuously, and reach optimal results with far fewer trials.

References

[1] Fontana, R., A. Molena, L. Pegoraro, and L. Salmaso. “Design of Experiments and Machine Learning with Application to Industrial Experiments.” Statistical Papers (2023). https://doi.org/10.1007/s00362-023-01437-w

[2] Arboretti, R., R. Ceccato, L. Pegoraro, L. Salmaso, et al. “Machine Learning and Design of Experiments for Product Innovation: A Systematic Literature Review.” Quality and Reliability Engineering International (2021/2022). https://doi.org/10.1002/qre.3025

[3] Shields, B. J., J. Stevens, J. Li, M. Parasram, F. Damani, R. P. Adams, and A. G. Doyle. “Bayesian Reaction Optimization as a Tool for Chemical Synthesis.” Nature (2021). https://doi.org/10.1038/s41586-021-03213-y

[4] Hickman, R. J., Z. Shang, et al. “Cost-Informed Bayesian Reaction Optimization.” Digital Discovery (2024). https://doi.org/10.1039/D4DD00225C

[5] Granda, J. M., L. Donina, V. Dragone, et al. “Controlling an Organic Synthesis Robot with Machine Learning to Search for New Reactivity.” Nature (2018). https://doi.org/10.1038/nature25978

FAQs

Q: What is the main difference between classical DOE and ML-DOE?

A: Classical DOE uses a fixed, pre-planned set of experiments. ML-DOE adapts experiment selection dynamically after each run.

Q: Why is ML-DOE especially useful today?

A: Modern systems are complex, nonlinear, and costly to test. ML-DOE handles complexity and reduces experimental load significantly.

Q: Does ML-DOE always outperform classical DOE?

A: Not always. Performance depends on data quality, noise, and system complexity. Classical designs still have value in some cases.

Q: What kinds of ML techniques are used?

A: Surrogate models (Random Forests, Gaussian Processes), Bayesian Optimization, and active learning methods.

Q: Can ML-DOE use historical data?

A: Yes, it can ingest archived experiments and use them to guide new experiments more efficiently.